Dr Juan Bernabé-Moreno, Director of IBM Research Europe for Ireland and UK, discusses the development and capabilities of a cutting-edge foundational model that promises to revolutionise climate monitoring.

Developed by IBM and NASA, with contributions from Oak Ridge National Laboratory, the weather and climate foundational model is aimed at addressing some of the world’s most pressing environmental challenges.

Built on NASA’s extensive Earth observation data, the project goes a step further: because the model is open source, this information becomes more accessible and usable for the scientific community. Researchers can fine-tune and specialise the model for specific climate-related problems without the need for massive computational resources.

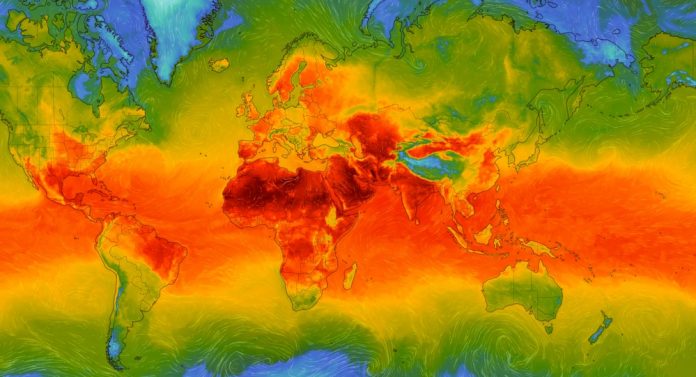

By integrating weather and climate data, the model offers significant improvements over previous approaches, particularly in areas like downscaling for more precise weather predictions and understanding complex atmospheric phenomena like gravity waves. Moreover, the model’s ability to scale from global to local levels makes it invaluable for studying both broad climate trends and specific, localised weather events.

We sat down with IBM’s Dr Juan Bernabé-Moreno to find out more.

What motivated IBM, NASA, and Oak Ridge National Laboratory to collaborate on this model?

Well, this is the second model we’ve developed in this setup. What’s driving all this effort is NASA’s vast collection of Earth observation data. NASA, with its satellites, collaborates with institutions like the European Space Agency and others to curate a vast corpus of data about our planet. This data is available to the scientific community and the public, but just sharing data often isn’t enough. That’s why we wanted to provide not just the data but a foundational model built on top of it so the scientific community could develop their own specialisations of this model to address their specific problems.

For example, if you’re given terabytes of data, training your own model requires significant engineering, computing power, and expertise. With the foundational model, we’ve done the hard work upfront. IBM provides the technology and expertise, Oak Ridge supports the infrastructure, and NASA offers the data and its expertise. Together, we’ve trained this foundational model and made it open-source. Now, if you’re a scientist or researcher, you don’t need to start from scratch. You can use the foundational model and add a small amount of additional data—maybe 500 to 1,000 data points—and with just a few GPUs, you can specialise the model to solve a specific problem.

In weather and climate modelling, this approach has made a huge difference. Take downscaling, for instance. Previously, it could take weeks and required a powerful cluster to handle the complexity. Now, with our model, it can be done much faster, with fewer resources, and with accuracy that competes with or surpasses the current state of the art. Moreover, the ability to do it quickly and cheaply means you can run more scenarios, generating better and broader results.

What were some of the limitations of previous climate models, and what are the advantages of this new one?

One major limitation is scalability. You’re often limited by computation, system data, and the challenge of combining different data sources. These tasks can turn scientific endeavours into complex IT projects. You face computational constraints, data availability issues, and the complexity of integrating multiple data types. On top of that, climate change itself is altering the data and observations, so models built on past experiences struggle with predictability.

Our model lowers the entry barrier significantly, allowing more people to use it. Traditionally, you need experts in multiple fields (meteorology, computational science, and statistical analysis) to address these challenges. By simplifying the process with our foundational model, we enable faster analysis, capture more relationships in the data, and help identify hidden phenomena that might signal extreme weather events in the future. This approach also dramatically speeds things up: what used to take weeks in a large high-performance computing (HPC) centre can now be done in hours with just a fine-tuned setup.

The model was trained on 40 years of NASA Earth observation data. How important was this data in improving accuracy?

There are several institutional datasets for weather, like ERA5 from Europe and MERRA-2 from NASA. We chose MERRA-2 because it’s from NASA’s Global Modeling and Assimilation Office, and we were working closely with them, so they knew the data very well. MERRA-2 is a multifaceted dataset with around 50 to 65 km grid resolution, 14 atmospheric layers, and many variables such as temperature, humidity, wind speed, and pressure.

It’s an incredibly complex and comprehensive dataset, which made it the perfect starting point for us because of its history, resolution, and completeness. However, we didn’t want to limit our model to just MERRA-2. One of our breakthroughs was the ability to work with different datasets, removing that limitation. For example, you could fine-tune our model with ERA5 data or even your own observations. This flexibility was crucial, especially for applications like renewable energy, where you need asset-level precision that standard grid-based data can’t provide.

Can you elaborate on the specific fine-tuned versions of the model, particularly the climate and weather data downscaling and gravity wave parameterisation? How do they enhance forecasting accuracy?

Downscaling is crucial because climate models, which project up to 100 years into the future, have a coarse resolution of about 160 km by 160 km. This is too broad to capture detailed weather phenomena, which require a resolution of 12 km or finer. So, our idea was to take these long-term climate models and downscale them to the finer resolution needed for weather predictions. This helps us better understand extreme weather events in the future.
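To give a sense of the scale involved, the short Python sketch below works through the two resolutions quoted above (160 km and 12 km). It is only an illustration, not the model's method: the function names `refinement` and `upsample_nearest` are hypothetical, the temperature values are made up, and the nearest-neighbour copy stands in for the kind of naive baseline that a learned downscaling model is meant to improve on.

```python
import math


def refinement(coarse_km: float, fine_km: float) -> tuple[int, int]:
    """Return (per-axis factor, fine cells per coarse cell), rounding the factor up."""
    factor = math.ceil(coarse_km / fine_km)
    return factor, factor * factor


def upsample_nearest(grid: list[list[float]], factor: int) -> list[list[float]]:
    """Naive baseline (an assumption for illustration): copy each coarse cell
    into a factor x factor block of fine cells, adding no new detail."""
    out = []
    for row in grid:
        fine_row = [value for value in row for _ in range(factor)]
        out.extend([list(fine_row) for _ in range(factor)])
    return out


# Resolutions quoted in the interview: about 160 km (climate) and 12 km (weather).
factor, cells = refinement(160.0, 12.0)

# Made-up 2 x 2 coarse temperature field in kelvin, purely illustrative.
coarse = [[280.0, 282.5], [279.0, 281.0]]
fine = upsample_nearest(coarse, factor)

print(factor, cells, len(fine), len(fine[0]))  # prints: 14 196 28 28
```

Each 160 km cell has to be filled with roughly 200 cells at 12 km, and a plain copy or interpolation cannot invent the detail inside them. That gap is what a fine-tuned downscaling model is trained to fill.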

Because our models are cheaper and faster to run compared to traditional HPC-based approaches, you can generate multiple downscaled models and compare them, offering valuable insights into future weather patterns. This can help scientists, policymakers, and even industries like agriculture and insurance plan for the future.

The other model focuses on gravity wave parameterisation, something our NASA colleagues were particularly interested in. Gravity waves are elusive and difficult to capture using traditional methods. Our model helps better understand the relationship between these waves and specific atmospheric phenomena, but this is more of a scientific challenge with a narrower audience compared to the broader implications of downscaling.

The model can run at global, regional, and local scales. Why is this ability so important?

It’s extremely important. Take El Niño, for example. It’s a global climate phenomenon that forms over months and affects half of the Earth. To understand something like that, you need to model it on a global scale. On the other hand, when you’re tracking something like a hurricane, you need a highly localised model to predict where and when it will hit and with what intensity. The model’s flexibility to scale from global to local means we can study broad phenomena like El Niño and also drill down to the specific impacts of hurricanes, extreme weather, or even wind patterns at a very localised level.

You’ve mentioned that the model is open source. How important is that for advancing research and enabling others to use it?

Open source is crucial. NASA has an open science initiative, and IBM is committed to open-sourcing its models to drive adoption. The model was designed to be open from the start because we want to empower the scientific community to build on top of it. Our goal is to have as many researchers as possible contribute to solving climate challenges, whether it’s improving climate adaptation or resilience strategies.

For instance, we open-sourced an earlier model for Earth observation, and since then, universities, climate institutions, and even defence organisations have created their own applications with it. They’ve provided valuable feedback and shared improvements, which has helped advance the model even further. This kind of community-driven innovation is essential, and with a challenge as big as climate change, no single organisation can tackle it alone. That’s why open source and collaboration are key.

IBM is collaborating with Environment and Climate Change Canada (ECCC) to test the model’s flexibility. How has the model performed?

It’s still early, but we’re seeing promising results. We’ve shared the foundational model with them, and we’re working on tasks like weather nowcasting, combining weather forecasts with high-resolution radar data. The tests show the model’s versatility, and we’re using radar data to help predict precipitation more accurately in the short term. This collaboration reinforces how adaptable the model is, and as we continue working with them, we’re getting new insights that will help us improve the model for the next training round.

Looking ahead, what do you think the long-term impact of the model will be?

It’s hard to predict the full long-term impact, but we believe these foundational models will converge and create something truly unique. By integrating different datasets—whether it’s Earth observation, weather, or climate data—you can create relationships between disparate types of information. This opens up entirely new possibilities. For instance, you could understand how climate impacts biodiversity in a forest or link weather patterns with renewable energy production. The potential discoveries are vast, and with the model in the hands of the community, the possibilities for addressing challenges like climate change are very promising.

It’s really about institutions coming together to tackle a global challenge. Our relationship with NASA has spanned many years, including work on the Apollo missions. Foundational models and generative AI are incredible enabling technologies. Often, we think of AI in terms of language models or generative applications, but the power of AI extends far beyond that into realms like climate science, where it can really make a difference.

What excites us the most is seeing how the world’s best scientific minds will take this model and do things we haven’t even imagined yet. The technology and the tools are there, but the magic will happen when the community comes together to use it.