Professor Xavier Vilasis-Cardona, from La Salle – Universitat Ramon Llull, explains why computer scientists are so important to the future of high energy physics experiments.

‘Without engineers, science is just philosophy.’ This popular T-shirt motto produces 105 million results on a Google search (as of 15 January 2021). It states, provocatively, the deep relation between the progress of science, regarded as deepening our understanding of Nature, and engineering, being the active use of this understanding. Broadly speaking, science and engineering work in a feedback loop: new understanding requires methods, machinery, and sensing devices provided by engineers, and in turn enables new machines, methods, and sensors. High energy physics experiments are probably a paradigm of this feedback loop. High energy physics is one of the many extreme frontiers of knowledge, and exploring the domain of high energies in the laboratory requires unique devices that are engineering challenges in themselves, the Large Hadron Collider (LHC) at CERN and its associated experiments probably being the best examples. Many branches of engineering are at play, including mechanics, electronics, cryogenics, and communications. That is why such experiments require large collaborations gathering thousands of physicists and engineers.[1]

Computing

Among engineering disciplines, however, computing is a special case. Physicists were among the first users of computers, at a time when concepts like computer scientist, computer engineer or software engineer barely existed. FORTRAN,[2] shorthand for FORmula TRANslation, was the most popular language, and physicists were their own programmers.

CERN was at the heart of the development of key computing technologies such as the World Wide Web. Confident in its abilities, the high energy physics community continued to develop specific tools to meet its immediate challenges, such as the PAW[3] and ROOT[4] environments, and set up the Grid Computing System to analyse the data from the LHC detectors.[5]

In the meantime, computer engineering has grown exponentially in capacity and methods, in both hardware and software. Furthermore, computing requirements have grown to the point that the full expertise of computer scientists has become essential. We have witnessed this process over the last ten years, starting from the need to process the LHC experimental data to obtain precision results, as no explicit manifestation of physics beyond the Standard Model has been observed.

The LHC

The foreseen upgrades of the LHC and its detectors raise the data processing needs to an unprecedented level of difficulty. In this context, the physics community has turned its attention to new computing architectures, standard programming languages, and renewed Artificial Intelligence techniques. Collaboration among institutes and detectors is seen as key to benefiting from joint experiences and initiatives such as the Machine Learning in High Energy Physics Community White Paper,[6] the Diana-HEP initiative,[7] or the COMCHA initiative in Spain.[8]

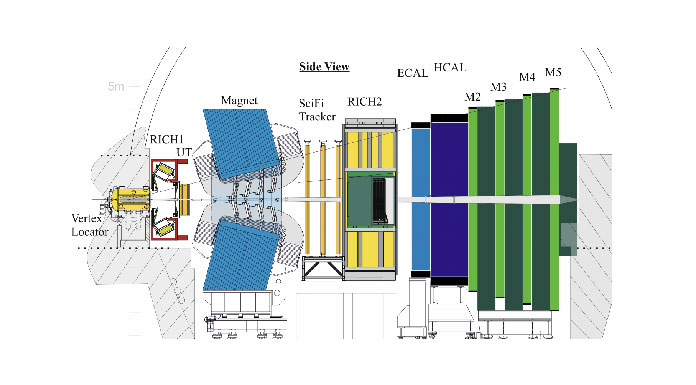

The LHCb detector[9] is a very good example of the magnitude of the challenges and of the solutions provided, combining the best expertise of physicists, computer scientists, and software engineers. LHCb is an experiment designed to study the violation of charge-parity symmetry and to search for phenomena not explained by the Standard Model of particle physics in the decays of particles containing beauty and charm quarks produced in the proton-proton collisions of the LHC. The detector started taking data in 2009 and is currently undergoing a major upgrade to continue its physics programme under the new operating conditions of the LHC, due to start in 2022.[10] The foreseen collision rate of the LHC and the structure of the detector sensors (see Fig. 1) will deliver an unprecedented data rate of 40 Tb/s. From this data flow, the trigger system will be in charge of selecting the potentially relevant events to be analysed in order to extract physics results.
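To give a feeling for the scale of the quoted data rate, the short Python sketch below converts 40 Tb/s into bytes per second and into the volume that one full day of uninterrupted running would produce. It assumes that 'Tb' stands for terabits with decimal prefixes, and the 24 hours of continuous data taking is a hypothetical figure chosen only for illustration, not an operating condition stated in this article.

```python
# Back-of-the-envelope conversion of the quoted 40 Tb/s data rate.
# Assumptions (not from the article): "Tb" means terabits, prefixes are
# decimal, and the detector runs for 24 hours without interruption.

TERA = 10**12
PETA = 10**15
BITS_PER_BYTE = 8
SECONDS_PER_DAY = 24 * 60 * 60

rate_bits_per_s = 40 * TERA
rate_bytes_per_s = rate_bits_per_s // BITS_PER_BYTE
bytes_per_day = rate_bytes_per_s * SECONDS_PER_DAY

print(f"{rate_bytes_per_s // TERA} TB/s")    # 5 TB/s
print(f"{bytes_per_day // PETA} PB per day")  # 432 PB per day
```

Under these assumptions, the trigger has to reduce a stream of several terabytes per second, which is why the selection cannot be deferred to offline storage.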

The definition of what counts as a potentially relevant event may change during the operation of the detector, so the trigger system will have to be flexible enough to adapt to new requirements. The existing standard trigger architecture is divided into a simple, fast hardware trigger and a flexible, but slower, software one. This would not be sufficient to provide the required combination of precision and flexibility in the selection of events. For this reason, a full software trigger system is required.[11] Processing the 40 Tb/s data flow entirely in software in a single computer farm is a major challenge, especially given a limited budget. The cornerstone of any effective solution is professional coding, using modern and efficient programming techniques, with particular attention to the efficiency of the algorithms and of the code itself.
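The flexibility argument can be illustrated with a toy Python sketch of a software trigger whose selection criteria are ordinary functions that can be added or removed while it runs. This is not the LHCb software: the `Event` fields, the `SoftwareTrigger` class, the line names, and the thresholds are all hypothetical and chosen only to show the idea.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Event:
    """Toy event summary; both fields are hypothetical."""
    n_tracks: int       # number of reconstructed tracks
    max_pt_gev: float   # highest transverse momentum among them, in GeV


Selection = Callable[[Event], bool]


class SoftwareTrigger:
    """Keeps a set of named selection lines that can change at run time."""

    def __init__(self) -> None:
        self._lines: Dict[str, Selection] = {}

    def set_line(self, name: str, selection: Selection) -> None:
        # Adding or redefining a line needs no hardware change.
        self._lines[name] = selection

    def remove_line(self, name: str) -> None:
        self._lines.pop(name, None)

    def fired_lines(self, event: Event) -> List[str]:
        return [name for name, sel in self._lines.items() if sel(event)]

    def accept(self, event: Event) -> bool:
        # An event is kept if at least one line selects it.
        return bool(self.fired_lines(event))


trigger = SoftwareTrigger()
trigger.set_line("high_pt", lambda e: e.max_pt_gev > 2.0)

events = [Event(12, 0.8), Event(40, 3.5), Event(150, 1.1)]
before = [trigger.accept(e) for e in events]

# The definition of a "relevant" event changes during operation.
trigger.set_line("high_multiplicity", lambda e: e.n_tracks > 100)
after = [trigger.accept(e) for e in events]

print(before)  # [False, True, False]
print(after)   # [False, True, True]
```

In a fixed hardware trigger, the equivalent of the second `set_line` call would mean changing electronics or firmware, whereas here it is a single line of code. The price is that every event must be processed in software, which is where the efficiency of the algorithms becomes decisive.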

Solutions

Two viable solutions have been developed. The first is the more conservative approach from the hardware point of view, using a computing infrastructure inherited from the previous one. It is a mandatory baseline solution to prove the feasibility of the detector upgrade.[12] The second, more daring, solution results from the vision and joint work of physicists, software engineers, and computer scientists. It consists of using general-purpose graphics processing units (GPUs) to perform part of the tasks. GPUs have evolved exponentially, driven by the video game industry, to become an essential hardware architecture in modern computation. They are key to the current success of machine learning techniques that solve problems like protein folding.[13] This innovative solution is named Allen, after Frances Allen, one of the pioneers of high-performance computing.[14] A GPU solution allows for important budget savings and adds power and flexibility to the trigger for the years to come.

The HiLumi LHC

In the near future, the general-purpose LHC experiments, ATLAS and CMS, will also face major upgrades to adapt to the next stage of the LHC, the so-called High Luminosity phase, at the end of the 2020s. The LHCb upgrade is demonstrating potential solutions to the high data rates in terms of hardware and software, and underlines the key role that computer scientists and software engineers play in the success of particle physics experiments.

From a researcher’s perspective, research groups from engineering and computer science schools that mix physicists and computer scientists, such as the Data Science for the Digital Society Research Group at La Salle – Universitat Ramon Llull, have thrilling opportunities to make major contributions to particle physics experiments and to the exploration of the high energy frontier. Furthermore, high energy physics collaborations need to take a close look at how computer science has thrived under the pull of other disciplines, and start enjoying the benefits. Great deeds can only rest on deep collaboration.

Acknowledgements

This paper has been supported by MINECO under grant FPA2017-085140-C3-2-P.

References

1. See volume 3 (2008) of the Journal of Instrumentation, devoted to the LHC and its main experiments, 2008 JINST 3

2. John Backus, ‘The history of FORTRAN I, II and III’ (PDF), Softwarepreservation.org (retrieved on 2014-11-19)

3. PAW, Physics Analysis Workstation, http://paw.web.cern.ch/paw/ (retrieved on 2021-01-14)

4. Rene Brun and Fons Rademakers, ‘ROOT – An Object Oriented Data Analysis Framework’, Proceedings AIHENP’96 Workshop, Lausanne, Sep. 1996, Nucl. Inst. & Meth. in Phys. Res. A 389 (1997) 81-86

5. The Worldwide LHC Computing Grid (WLCG), https://home.cern/science/computing/grid (retrieved on 2021-01-15)

6. Kim Albertsson et al., ‘Machine Learning in High Energy Physics Community White Paper’, J. Phys.: Conf. Ser., 2018, 1085 022008

7. Diana-HEP, https://diana-hep.org (retrieved on 2021-01-15)

8. Martínez Ruiz del Árbol, ‘COMCHA: Computing Challenges for the HL-LHC (and beyond)’, presentation at the XI CPAN Days in Oviedo (Spain), October 2019

9. The LHCb Detector at the LHC, 2008 JINST 3 S08005

10. Framework TDR for the LHCb upgrade – CERN LHCC 2012-17 LHCB-TDR-012

11. Computing Model for the LHCb upgrade TDR – CERN LHCC 2018-14 LHCB-TDR-018

12. LHCb Trigger and Online Upgrade TDR – CERN-LHCC-2014-016 LHCB-TDR-016

13. ‘AlphaFold: a solution to a 50-year-old grand challenge in biology’, https://deepmind.com/blog/article/alphafold-a-solution-to-a-50-year-old-grand-challenge-in-biology (retrieved on 2020-01-15)

14. LHCb upgrade GPU High Level Trigger TDR – CERN-LHCC 2020-006 LHCb TDR 21

Please note, this article will also appear in the fifth edition of our quarterly publication.