Dr François Bouchet, Research Director at the French National Centre for Scientific Research (CNRS), was one of the pioneers of the European Space Agency’s Planck project and the scientific co-ordinator of the ‘Planck-HFI’ consortium. Here, he outlines the history and outstanding success of the Planck project, Europe’s first mission to study the Cosmic Microwave Background (CMB).

In the spring of 2020, the very last paper of the ‘Planck-HFI’ consortium was given the go-ahead for publication in the Astronomy & Astrophysics journal, concluding an endeavour started in 1992 with a proposal to the European Space Agency (ESA). After detailed studies, this proposal led to a formal selection by ESA in 1996, for a launch then envisaged in 2003. The launch finally took place from Kourou in 2009, together with the Herschel satellite, after several twists and turns quite typical of ambitious space projects. And the Planck project was indeed ambitious, since the aim was to provide the definitive map of the anisotropies of the cosmic microwave background (CMB). The sky emission of the CMB varies as a function of the direction of observation, and these variations bear the imprint of the state of the Universe 13.8 billion years ago, when matter and light last interacted. Reaching this goal required several technological breakthroughs. As we shall see, all hurdles were cleared and the goal was accomplished (and even vastly exceeded), delivering one of the greatest successes ever seen in European science.

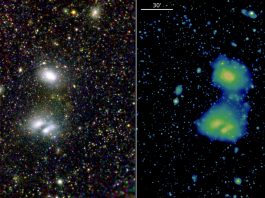

Over the last century, cosmologists have progressively gathered a great deal of information on the Universe and established a model of its history which accounts very well for a myriad of observations. The Planck results are the current crowning jewel of this quest. We now know that the Universe is expanding, and that large-scale structures grew from tiny fluctuations under the influence of gravity, which pulls matter towards regions which were initially slightly overdense. Conversely, matter is evacuated from slightly underdense regions and piles up progressively in thin shells around them, flowing next to the filaments at the intersection of shells, and then along the filaments to the clusters of galaxies residing at the intersection of three filaments. This defines a series of structures of progressively larger density contrast, from voids to sheets to filaments to clusters, akin to the frame of a sponge. Galaxies naturally form preferentially where there is more matter.

How can we possibly explore the state of the Universe 13.8 billion years ago?

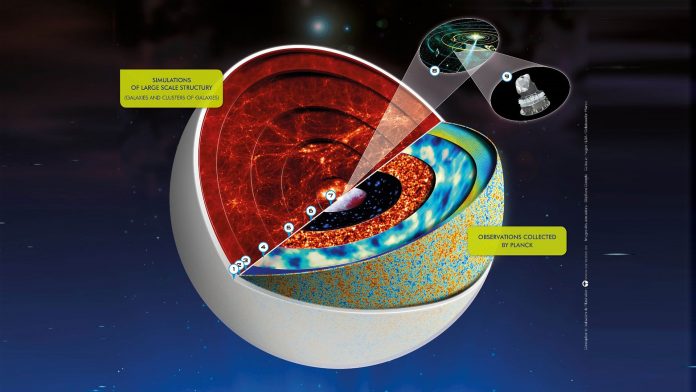

One crucial element to remember is that while the speed of light is very large (~300,000 km/s), it is nevertheless finite, thus we see remote objects as they were when their image started travelling to us, e.g., the Sun appears to us as it was eight minutes ago. The further away we look, the more we peer into the past of the Universe. This ‘time machine’ allows us to literally see the formation of large-scale structure at work (as long as the emitting objects are not too remote and dim). This is illustrated in the top part of Fig. 1, where the structures within each shell are progressively weaker towards earlier times.
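
As a rough illustration of the ‘eight minutes’ quoted above, the delay is simply the distance divided by the speed of light. The sketch below uses standard values for the two constants; the function name is purely illustrative. This simple division only holds for nearby objects: for remote galaxies, the expansion of the Universe during the light's journey has to be taken into account.

```python
# Light-travel time: how far into the past we look when observing a nearby object.
SPEED_OF_LIGHT_KM_S = 299_792.458       # exact, by definition of the metre
ASTRONOMICAL_UNIT_KM = 149_597_870.7    # Earth-Sun distance (IAU definition)


def light_travel_time_s(distance_km: float) -> float:
    """Return the time in seconds that light needs to cover distance_km."""
    return distance_km / SPEED_OF_LIGHT_KM_S


sun_delay_min = light_travel_time_s(ASTRONOMICAL_UNIT_KM) / 60.0
print(f"Sunlight is about {sun_delay_min:.1f} minutes old when it reaches us")
```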

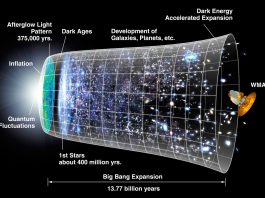

Another element to remember is that since the Universe is expanding, it was denser and therefore hotter during earlier times. When we foray far enough away, we end up exploring times before any object had been formed (the ‘dark ages’), and when matter was quite hot and dense. So much so that, at sufficiently early times, the content of the Universe was in a luminous state called a plasma, where electrons were not bound to nuclei (mostly hydrogen nuclei, since this is before stars began to synthesise heavier elements). In that state, light and matter are tightly coupled through constant interactions between the electrons and the photons, and the Universe is brightly opaque, like fog illuminated from the inside. This very early phase, before about 380,000 years after the Big Bang, ended when the expansion of the Universe caused the plasma to cool, the atoms to form, and the primordial light to become decoupled from matter.

Anisotropies of temperature

At that moment, the tiny fluctuations of density and velocity which seed the formation of later large-scale structures left their imprint on the primordial light, which then travelled uninterrupted to us. These imprints amount to small temperature fluctuations in the blackbody radiation left over from the Big Bang, known as ‘anisotropies of temperature’. They were first detected in 1992 by NASA’s COBE satellite, and George Smoot, the principal investigator of the discovery instrument, was awarded the Nobel Prize in Physics in 2006. This discovery was precisely what prompted us to propose to ESA, in 1992, what later came to be called the Planck satellite project.

These anisotropies are a central tenet of cosmology. First, because their existence was a key prediction of the Big Bang model with its gravitational growth of structure from tiny primordial seeds. Second, because the specifics of these CMB anisotropies reflect the characteristics of the primordial seeds and the Universe content at that time. In fact, they offer access to all that determines the content, evolution, and fate of the Universe, at least as long as the cosmological model remains an adequate description of all that we see. It was then very natural to follow up the discovery work of COBE with observations which could reveal finer details and thus a lot more information, mostly inaccessible by any other means.

COBE had an angular resolution of only 10 degrees, the sensitivity of its detectors was barely sufficient to detect the anisotropies, and only low frequencies, below 100 GHz, could be measured, giving little handle on low-level contamination from foreground emissions. Guided by theory, in the year the COBE discovery was announced, we boldly proposed to build the ultimate experiment, which would exhaust the information content of the temperature anisotropies by measuring them with great sensitivity from the largest scales down to the scales (around 1/10 of a degree) where they become dominated by other, non-primordial signals. The experiment would also open the window to frequencies higher than 100 GHz.

Planck’s High Frequency Instrument

This completely new window proved to be very favourable for pursuing this endeavour and controlling the galactic foreground emissions, to the point that most cosmological results from Planck were deduced from measurements in this window by the dedicated instrument called the HFI (High Frequency Instrument). Rather than being an evolution of known technologies like those deployed on COBE (and later by WMAP and the Low Frequency Instrument, LFI, aboard Planck), the Planck-HFI was designed around a new type of detector, utilising bolometers built by Caltech, promising access to this window, together with much better sensitivity. The downside of using this new sensitive technology was that the bolometers need a very low and stable operating temperature, one-tenth of a degree above absolute zero, i.e., 0.1 Kelvin (or -273.05°C), with temporal fluctuations one thousand times smaller, around a few milli-Kelvin, for several years in a row.

This daunting task was made possible thanks to the invention at the Néel Institute in Grenoble, France, of a completely new type of 0.1K cooler, which uses capillarity along thin coiled tubes to mix two isotopes of helium in a reduced gravity environment. For several years, Planck was destined to become the coolest point in the Universe outside of Earth (at least barring undiscovered technologically advanced civilisations). The requirement of measuring fluctuations at the level of one microkelvin (one millionth of a degree) led to numerous other challenges, such as computing and measuring the response of the detectors to (the very weak part of) the incoming radiation from way off-axis. In fact, one can think of Planck as three intertwined chains: optical, electronic, and cryogenic, with each chain often having requirements that conflict with those of the others, e.g., on mapping speed. And to top it all, ESA had to send this satellite to, and operate it from, the second Lagrange point of the Earth-Sun system, about 1.5 million kilometres from Earth, a first for ESA. But all went superbly well, and HFI cryogenic operations even exceeded the nominal duration by nearly a factor of two. The last command was sent on 23 October 2013.

New challenges

As soon as the data started reaching the ground, another challenge began: building maps from the time streams and characterising them, which meant understanding the instruments at a level of detail rarely if ever required for a remote experiment. We were greatly helped by the many years of preparation at the Data Processing Center. As I used to insist for years before launch, our goal was to be fully operational from day one, meaning that we would only be left with the unexpected. And indeed, we started visualising the maps as soon as the data started to flow in.

However, we did have to cope with unexpected amounts of cosmic rays, as well as the implications of an insufficiently characterised part of the electronic chain, which threatened to make the collected data essentially useless, or at least much less interesting than hoped. But we were saved by the great redundancy of the roughly 1,000 billion time samples, which provided us with many renderings of the same pixels of the sky, as well as by the hard work and ingenuity of about 250 people for about a decade.
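
To give a feel for why redundancy matters, here is a minimal sketch, using invented numbers, of the simplest possible map-making: every time sample is assigned to a sky pixel and the samples of each pixel are averaged. With independent noise, the error of the resulting map shrinks roughly as one over the square root of the number of visits per pixel. This is only a toy: the function names, the noise level and the 50-pixel ‘sky’ are assumptions made for illustration, and the actual Planck processing had to deal with correlated noise and instrumental systematics which plain averaging ignores.

```python
import random


def bin_samples_into_map(pixel_indices, samples, n_pixels):
    """Average all the time samples that fall into each sky pixel."""
    sums = [0.0] * n_pixels
    hits = [0] * n_pixels
    for pix, value in zip(pixel_indices, samples):
        sums[pix] += value
        hits[pix] += 1
    return [s / h if h else float("nan") for s, h in zip(sums, hits)]


random.seed(0)
n_pixels = 50
true_sky = [random.gauss(0.0, 1.0) for _ in range(n_pixels)]  # toy sky signal


def map_error(hits_per_pixel, noise_sigma=5.0):
    """RMS difference between the binned map and the true sky."""
    pixel_indices = [p for p in range(n_pixels) for _ in range(hits_per_pixel)]
    samples = [true_sky[p] + random.gauss(0.0, noise_sigma) for p in pixel_indices]
    sky_map = bin_samples_into_map(pixel_indices, samples, n_pixels)
    squared = [(m - t) ** 2 for m, t in zip(sky_map, true_sky)]
    return (sum(squared) / len(squared)) ** 0.5


# More visits per pixel give a cleaner map (roughly as 1 / sqrt(visits)).
for visits in (1, 100, 2500):
    print(visits, round(map_error(visits), 3))
```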

Data releases and analyses

The first data release took place in March 2013, based on the intensity data from the nominal mission duration of 14 months. A second release completed by August 2015 encompassed all the collected data and had a first attempt at the analysis of the much weaker polarisation signal. Finally, in August 2018 we started to deliver a refined legacy release, with the last element finally completed in March 2020, 28 years after the initial proposal.

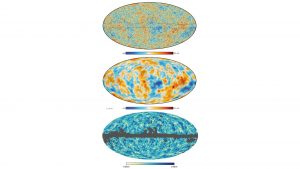

The first part of the data processing work was to obtain maps of the intensity and the polarisation of the sky emission in nine different frequency bands, spanning the range from 30 to 800 GHz, which makes it possible to separate sky emissions with different spectral behaviours. For instance, the synchrotron emission from our own galaxy dominates at low frequencies and becomes quite weak compared to the CMB anisotropies around 100 GHz, where the latter dominate. Conversely, the thermal emission of the dust of our galaxy dominates at high frequencies and becomes relatively weak at around 100 GHz (at least when not looking through the Galactic plane). We therefore created maps with about one billion pixel values in total, from which we extracted the foreground-cleaned anisotropy maps shown in Fig. 2.
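
The principle of separating emissions by their spectral behaviour can be sketched with a deliberately tiny example: two channels, two components, and a linear system solved pixel by pixel. Everything here is an assumption made for illustration: the channel labels, the dust scaling factors and the function name are invented, and the real analysis combined nine bands with far more elaborate methods.

```python
# Hypothetical mixing coefficients: the response of each channel to each component.
# In CMB temperature units the CMB contributes equally to every channel;
# the dust factors 0.2 and 3.0 are invented for illustration only.
MIXING = {
    "low_channel": {"cmb": 1.0, "dust": 0.2},
    "high_channel": {"cmb": 1.0, "dust": 3.0},
}


def separate(obs_low, obs_high):
    """Solve the 2x2 system obs = MIXING x (cmb, dust) for a single pixel."""
    a, b = MIXING["low_channel"]["cmb"], MIXING["low_channel"]["dust"]
    c, d = MIXING["high_channel"]["cmb"], MIXING["high_channel"]["dust"]
    det = a * d - b * c
    cmb = (d * obs_low - b * obs_high) / det
    dust = (a * obs_high - c * obs_low) / det
    return cmb, dust


# A pixel with a CMB signal of 70 and a dust signal of 10 (arbitrary units)
# would be observed as 72 in the low channel and 100 in the high channel.
cmb, dust = separate(72.0, 100.0)
print(cmb, dust)
```

The separation works only because the two components scale differently with frequency; if the two rows of coefficients were proportional, the determinant would vanish and the components could not be told apart.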

The intensity and polarisation maps of Fig. 2 are the imprint of the primordial fluctuations which initiated the development of complexity in the Universe, ultimately leading to galaxies and their stars which spat out the heavy elements from which we arose. The third panel gives an idea of the distribution of mass in the Universe, which we deduced from its light-bending effect on the two previous ones. But these visuals do not do justice to the most important part of the maps: their metadata, characterising how the image quantitatively relates to the actual sky. This allows us to determine extremely well how the instruments distort the sky emission and add noise on top of it, to correct for these effects as well as possible, and to identify and carry through all of the uncertainties in the process, since these will ultimately propagate to the uncertainties of the accrued cosmological understanding of our Universe (or misguide it, if this is not done properly).

It turns out that such characterisation can only be done completely through very demanding numerical simulations. We therefore created thousands of simulated Planck data sets, processed like the real data, and in the process we used up tens of millions of CPU hours! This created a demand which was unheard of in other space experiments, and funding agencies took a lot of convincing that this was indeed necessary. The simulation bottleneck is now understood, and here to stay for all future complex cosmology endeavours.

The history of the Universe

Of course, these CMB maps do not directly tell us the history of the Universe. We need to characterise them mathematically and confront the result with a priori theoretical models. One image may prove useful. We can think of a CMB anisotropy map as a map of the surface of a planet covered with oceans, with the colours coding the relative height of the surface in different locations. A most revealing transformation would be to decompose the height variation into a superposition of waves of different heights and wavelengths. From that we could then assess the distribution of the heights of waves of a given wavelength, and in particular extract the typical height of a wave as a function of its wavelength, or rather of its inverse, its multipole. This is what we actually do for temperature anisotropies, and the top panel of Fig. 3 shows the result, known as the power spectrum of the anisotropies. A similar process can be performed for polarisation¹, the cross-correlation of intensity and polarisation, or the lensing effect, which are shown in the other panels of Fig. 3. In all cases, this amounts to a characterisation of the typical strength of a signal versus scale.
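
The ocean-wave image can be tried out on a one-dimensional ‘surface’. The sketch below is a flat, one-dimensional analogue only: it decomposes a periodic profile into waves with a plain discrete Fourier transform and reports the power at each wavenumber. On the sphere, the same role is played by spherical harmonics, with the multipole in place of the wavenumber. The function name and the test surface are invented for illustration.

```python
import cmath
import math


def power_spectrum(heights):
    """Return |DFT|^2 / N^2 for wavenumbers 0..N//2 of a periodic 1D profile."""
    n = len(heights)
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(h * cmath.exp(-2j * math.pi * k * i / n)
                    for i, h in enumerate(heights))
        spectrum.append(abs(coeff) ** 2 / n ** 2)
    return spectrum


n = 64
# A surface made of a long, tall wave (k = 3) and a short, small wave (k = 10).
surface = [2.0 * math.cos(2 * math.pi * 3 * i / n)
           + 0.5 * math.sin(2 * math.pi * 10 * i / n) for i in range(n)]

spectrum = power_spectrum(surface)
# The two wavenumbers carrying the most power, strongest first.
dominant = sorted(range(len(spectrum)), key=spectrum.__getitem__, reverse=True)[:2]
print(dominant)  # [3, 10]
```

With this normalisation, a wave of amplitude A contributes a power of A²/4 at its wavenumber, so the spectrum directly encodes the ‘typical height versus wavelength’ described above.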

Once a power spectrum has been measured, it is akin to having a digital imprint of the early Universe and we can confront it with an atlas of pre-computed predictions under various hypotheses. Several things may result from this matching process. It may turn out that none of the models fit within the acceptable range of uncertainties. That is, we would have proved that all our earlier ideas were wrong! Or at least incomplete. The other possibility would be that several models would match, given the uncertainties. Of course, the tighter the uncertainties, the less residual degeneracy, i.e., inability to distinguish between possibilities. We found that the so-called ‘Tilted-Lambda-Cold-Dark-Matter’ model (ΛCDM, also written LCDM) provides a perfect statistical match, provided its parameters have certain values, which are determined at the per cent level of precision! This model’s predictions, for the best fitting parameters, are given by the blue curves of Fig. 3, which describe the data perfectly.

It is worth noting that the relatively large uncertainties at low multipoles (large scales) are entirely due to the fact that we have only one sky to observe, and thus a relatively small number of very large-scale anisotropies to average over and compare with the mean prediction of models. Otherwise, the uncertainties due to measurement noise are so small in temperature that they are barely visible at multipoles greater than 100, i.e., for angular scales smaller than a few degrees. As a result, no future experiment will improve the Planck intensity spectra for cosmology, and all the information was mined, as pledged at the time of selection. One can nevertheless discern an increase of the uncertainties at large multipoles (≳1500) due to the detector noise, which is more limiting for the polarisation and lensing power spectra. This is where much more information on the CMB still lies, and it is the object of ongoing and future projects.
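
The ‘only one sky’ limitation can be put into numbers. Under the usual idealisation of Gaussian fluctuations observed over the full sky with no instrumental noise, each multipole ℓ offers only 2ℓ + 1 independent modes to average over, which gives a fractional uncertainty of √(2/(2ℓ + 1)) on the power at that multipole. The short sketch below, with an illustrative function name, simply evaluates this expression.

```python
import math


def cosmic_variance_fraction(ell: int) -> float:
    """Fractional 1-sigma uncertainty on the power at multipole ell.

    Assumes Gaussian fluctuations, full-sky coverage and no detector noise:
    only 2 * ell + 1 independent modes are available at each multipole.
    """
    return math.sqrt(2.0 / (2 * ell + 1))


for ell in (2, 10, 100, 1000):
    print(f"multipole {ell:4d}: {100 * cosmic_variance_fraction(ell):5.1f} %")
```

The uncertainty falls steadily as the multipole grows, which is why the error bars are large only at the largest angular scales; averaging neighbouring multipoles together reduces it further.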

The ‘Tilted-Lambda-Cold-Dark-Matter’ model

The ‘Tilted-Lambda-Cold-Dark-Matter’ model has only six parameters: two to describe the amplitude of the primordial fluctuations versus scale (i.e., of the initial seed fluctuations themselves, not their CMB imprint), three to describe the content of the Universe and its expansion, and a ‘nuisance parameter’, which describes in an ad hoc way how the ignition of the first stars slightly modifies the spectra. Amazingly, these six parameters are enough to determine the imprint of the primordial fluctuations on the cosmic fireball. And with these parameters in hand, we can now predict everything which follows in time, the growth of complexity, and even the fate of the Universe, and we can confront these predictions with other cosmological observations made around us, 13.8 billion years later.

Before turning to that, it is worth stressing that the simplicity of the ΛCDM model is somewhat deceptive. Indeed, it builds upon the two primary theories of physics, quantum mechanics and general relativity, together with far-reaching assumptions, many of which were directly tested with Planck data. To mention a few major ones:

- Physics is the same throughout the observable Universe

- General Relativity (GR) is an adequate description of gravity (even on scales where it cannot be tested directly)

- On large scales, the Universe is statistically the same everywhere (initially an assumption, or ‘principle’, but now strongly implied by the near isotropy of the CMB)

- The Universe was once much hotter and denser and has been expanding since early times

- There are five basic cosmological constituents (dark energy, dark matter, normal matter, the photons we observe as the CMB, and neutrinos that are almost massless)

- The curvature of space is very small

- Variations in density were laid down everywhere at early times, nearly scale invariant, but not quite, as predicted by inflation

All the above is combined in a clockwork fashion to produce the theory predictions. And departures from these assumptions can only be very small, given the Planck-HFI data.

One of the primary results of Planck has been finding that the amplitude of primordial fluctuations is not exactly the same at all scales, as determined by one of the six parameters measured. This essential effect was predicted in the 1980s by two Russian cosmologists, Viatcheslav Mukhanov and Gennady Chibisov, who were studying the generation of primordial fluctuations at very early times through irrepressible quantum fluctuations of the vacuum, expanded to cosmological scales during an exponentially fast expanding phase known as ‘inflation’. This deviation from scale invariance derives from the finite duration of the inflationary phase and is one of the most discriminating characteristics of the various models of inflation. The catch was that this is a tiny effect, which could only be established securely with the advent of the Planck data.

Precision and confidence

One may wonder whether the stated precision of the determination of the fundamental cosmological parameters is not misleading. Indeed, what if one of the hidden assumptions in the standard (or base) ΛCDM model is broken? For instance, the base model assumes that the variation of the primordial fluctuations with scale is simply described by a one-parameter function (a simple power-law). The base model also assumes a standard content of the Universe given known particle physics, and a flat spatial geometry. Each of these assumptions can be relaxed in possible extensions or generalisations, and many of these can be characterised by introducing a single extra parameter in the model; one then computes the new predictions to enrich the atlas of potential signatures before confronting them with the data.

The results are shown graphically in Fig. 4, which gives the acceptable range of seven of these new parameters, in conjunction with the modified constraints on the base ΛCDM parameters. One can then assess such effects at three levels of precision, considering the data pre-WMAP, post-WMAP, and post-Planck. At the level of Planck’s precision, the base parameters are mostly unaffected by these new degrees of freedom, which demonstrates the resilience of key results to the introduction of these extensions.

The two-dimensional cuts through the now seven-dimensional parameter space of Fig. 4 also allow us to gauge the massive reduction in the allowed range of values which the CMB data has achieved. In fact, one may compute that the relative post-Planck reduction of the volume of allowed space as compared to COBE is more than 10¹⁰ for ΛCDM! And for the largest model spaces, having four or five additional dimensions compared to ΛCDM, this improvement is more than 10¹⁶ in 26 years, corresponding to a six-month doubling time, three times faster than Moore’s Law! This power, combined with the transparency of why we get these constraints, their intelligibility, is what makes the CMB so unique.
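
The doubling time quoted above can be checked in a few lines, reading the improvement factor as ten to the power sixteen (sixteen orders of magnitude) over 26 years and assuming steady exponential progress. The 18-month figure used for Moore’s Law is the common popular statement of it and is an assumption of this sketch, as is the function name.

```python
import math


def doubling_time_years(improvement_factor: float, elapsed_years: float) -> float:
    """Time needed to double, assuming steady exponential improvement."""
    return elapsed_years / math.log2(improvement_factor)


cmb_doubling = doubling_time_years(1e16, 26)
moore_doubling = 1.5  # assumption: the popular 18-month version of Moore's Law

print(f"CMB constraints doubled every {cmb_doubling:.2f} years, "
      f"about {moore_doubling / cmb_doubling:.1f} times faster than Moore's Law")
```

A factor of ten to the sixteen corresponds to about 53 successive doublings, hence roughly one doubling every six months.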

To recap: one hundred million values of Planck CMB map pixels and their characterisation (extracted from about 1,000 billion time samples) can be summarised by precise constraints on the six parameters of a ‘simple’ model, with no need to introduce further physics. Once the model was calibrated on Planck data, we found that its predictions are consistent with the results from almost all other cosmological probes, spanning 14 Gyr in time and three decades in scale, from the nuclear abundances of ordinary matter to the spatial distribution of galaxies. This is an impressive testament to the explanatory power of ΛCDM.

Remaining questions

Is this to say that everything is in order and cosmology is over? Of course not. Despite these successes, some puzzling tensions and open questions remain. While many measures of the matter distribution around us are in excellent agreement with the predictions of ΛCDM fit to the Planck data, this is not true of all of them. In particular, the value of the expansion rate determined through local measurements remains discordant with the inferences from the ΛCDM model calibrated on Planck. One needs to determine whether this tension points to unlikely statistical fluctuations, misestimated systematic uncertainties, or physics beyond ΛCDM. And multiple questions remain. For example:

- What is the exact mechanism for the generation of fluctuations in the early Universe?

- If it is inflation, as we suspect, what determines the pre-inflationary state, and how does inflation end?

- What is dark matter?

- Are there additional, light, relic particles?

- What is causing the accelerated expansion of the Universe today? and

- How do astrophysical objects form and evolve in the cosmic web?

Absent a breakthrough in our theoretical understanding, the route forward on all these questions is improved measurements, in which further observations of CMB anisotropies will play a key role.

Given that Planck-HFI has effectively mined all the information in the primary temperature anisotropies, the focus of CMB research is now shifting to studies of polarisation and secondary effects such as CMB lensing. One particular pattern of polarisation is being sought especially keenly, since its detection would betray the presence of the primordial gravitational waves created during the inflationary phase. Such a detection would have very wide implications for fundamental physics and offer a unique experimental window on quantum gravity, the high energy unification of quantum mechanics and general relativity, which is necessary in order to understand the most extreme phenomena of our Universe.

Sadly, however, Planck’s achievement will not be repeated, and it seems that Europe will at best only help others unravel the deepest mysteries of our primordial Universe.

References

1. Polarisation is often characterised by two numbers, giving the direction of polarisation and the fraction of the light which is polarised. But it can also be decomposed into two modes or patterns, so-called ‘E’ and ‘B’, each characterised by a single number. At the level of Planck’s sensitivity, only the E-modes can be detected, and this is what this article concentrates on, in particular for the power spectrum.

Dr François Bouchet

Research Director

The French National Centre for Scientific Research (CNRS)

The Institut d’astrophysique de Paris

+33 (0)1 44 32 80 95

bouchet@iap.fr

Tweet @CNRS

Tweet @astroIAP

www.esa.int/Enabling_Support/Operations/Planck

www.iap.fr

Please note, this article will also appear in the third edition of our new quarterly publication.