Jason Swedlow, Open Microscopy Environment, University of Dundee, UK, discusses publishing big image data online.

Access to primary research data is vital for advancing science. It facilitates the validation of existing observations and provides the raw materials to build on previous work. Over the last 20 years, public resources that hold reference genomes from many different organisms, and structures of proteins and other biomolecules, have become the bedrock of the life and biomedical sciences. These resources function as large, sophisticated databases that often require large international collaborations to engineer and maintain.

In the past, research communities have collaborated to build these resources and the tools that allow public submission of, and access to, particular types of datasets. As a result, there are now public scientific repositories for gene sequences, protein structural data, and gene and protein expression profiles. In each of these cases the scientific community united to standardise the structure of the data and its associated metadata, and to create centralised repositories to facilitate deposition, promote discoverability, and ensure the longevity of the data the community has collected and needs to share to enable the next round of discoveries.

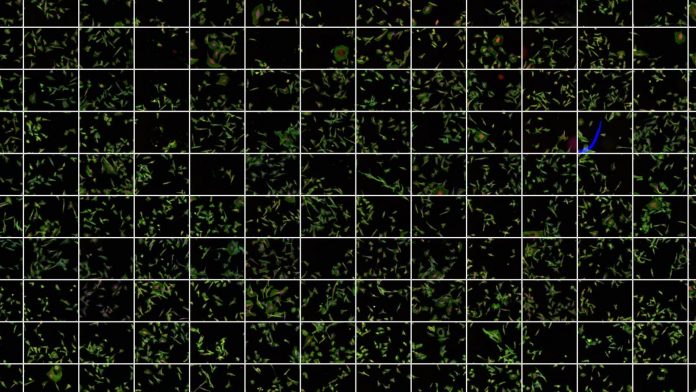

Until recently, similar resources were not available for image data. This is a problem, because much of the published research in the life and biomedical sciences is based on imaging. Images of cells, tissues and organs grace our newspapers and magazines, but they differ from the images we record on our mobile phones: scientific images are quantitative data, which means the value at every pixel is a measurement of the concentration or interaction of molecules inside cells and tissues.

Imaging datasets are routinely used to quantify the biological processes and structures that underlie life, and are therefore a critical component of many of the results published in peer-reviewed life sciences journals. However, a single scientific image can contain millions or even billions of pixel measurements, so imaging data are often large and unwieldy. The sheer size and complexity of image datasets make them hard to share or publish routinely.
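As a rough illustration of that scale, the short sketch below computes the pixel count and uncompressed size of a single multidimensional acquisition. The dimensions (10 time points, 3 channels, 50 focal planes of 2048 x 2048 pixels) and the 16-bit (2-byte) pixel depth are hypothetical values chosen for illustration, not figures taken from any IDR dataset.

```python
from math import prod


def pixel_count(shape):
    """Total number of pixel measurements in an image with the given dimensions."""
    return prod(shape)


def size_gib(shape, bytes_per_pixel=2):
    """Uncompressed size in GiB, assuming a fixed number of bytes per pixel (2 = 16-bit)."""
    return pixel_count(shape) * bytes_per_pixel / 2**30


# Hypothetical 5D acquisition: (time points, channels, z-sections, height, width)
shape = (10, 3, 50, 2048, 2048)
print(pixel_count(shape))  # 6291456000 pixel measurements
print(size_gib(shape))     # 11.71875 GiB before any compression
```

Even this modest, made-up experiment yields over six billion measurements and roughly 12 GiB of raw data, which is why downloading whole datasets quickly becomes impractical.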

In almost all cases images are presented in published articles in processed, compressed formats that do not accurately convey the quality and complexity of the original image data. Those original data are housed in thousands of individual labs in hundreds of different file formats. The data are difficult for researchers to share and in practice impossible to publish.

IDR: A prototype image data resource

To demonstrate the capability and utility of publishing complete scientific image data, a collaboration of scientists and software developers at the University of Dundee, EMBL-EBI, Cambridge and the University of Bristol (all UK) has built the Image Data Resource (IDR) and populated it with community-submitted image datasets, experimental and analytical metadata, and phenotypic annotations. The current version of this resource is built and deployed on EMBL-EBI’s Embassy cloud resource at https://idr.openmicroscopy.org.

IDR currently holds ~47 terabytes of image data in ~37 million images, and includes all associated experimental (e.g., genes, RNAi, chemistry, geographic location), analytic (e.g., data submitter-calculated regions and features), and functional annotations, wherever possible using ontological identifiers. IDR holds imaging data on human cells (e.g., http://goo.gl/1zoIIk), Drosophila (http://goo.gl/jPfM3j), and fungi (e.g., http://goo.gl/yFPQCw; http://goo.gl/n3ix5v). IDR also holds imaging data related to gene transcription studies in plants (https://goo.gl/eeQ1cE) and imaging data from the Tara Oceans project, a global survey of plankton and other marine organisms (http://goo.gl/2UWWnj).

Recently, IDR published a collection of human cardiac biopsy images that can be used as benchmark datasets for developing advanced deep learning algorithms that can aid the diagnosis of human disease (https://goo.gl/szES7E).

By making all these data publicly available, IDR provides a working example of technology that makes imaging data FAIR: Findable, Accessible, Interoperable and Re-usable.

Cloud-based analysis

The datasets in IDR range in size from gigabytes to terabytes, so it is not practical to expect users who want to analyse IDR images simply to download the data. We have therefore added a cloud-based Virtual Analysis Environment (VAE) where users can log in and run their own analyses on IDR data (https://idr-analysis.openmicroscopy.org/).

This opens up opportunities to use IDR data in ways the original data generators never thought of, which is exactly why scientific data are made publicly available.

An open source foundation

IDR is built on the open source OMERO and Bio-Formats software platforms, developed by OME (https://www.openmicroscopy.org), an international consortium building open tools for handling scientific imaging data. The software used to construct IDR and annotate its datasets is open source and publicly available, so other projects can re-use IDR technology to handle their own data. In the longer term it may be possible to link multiple IDR sites into a much larger, distributed resource.

Capabilities and impact of IDR

IDR assembles and integrates imaging datasets from independent scientific studies. IDR focuses on reference image datasets: data that are richly annotated with molecular and/or phenotypic information, which extends the value of the images and makes it possible to connect data across datasets.

For example, with IDR’s annotations it is possible to find all images of cells where the PTEN tumour suppressor gene is perturbed (https://idr.openmicroscopy.org/mapr/gene/?value=PTEN) or where the anti-cancer drug paclitaxel is used (https://idr.openmicroscopy.org/mapr/compound/?value=Paclitaxel). Using IDR’s VAE, it is possible to combine and customise such queries and build much more sophisticated analyses.
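The two example links share a simple pattern: the IDR host, a `mapr` path segment, an annotation type (`gene` or `compound`), and the search term passed as a `value` query parameter. The sketch below builds such links programmatically, for instance to generate one link per gene in a list. It assumes only that the pattern seen in those two URLs holds; the helper name `mapr_url` is hypothetical, and annotation types other than `gene` and `compound` are not confirmed here.

```python
from urllib.parse import urlencode

# Host taken from the example links above
IDR_BASE = "https://idr.openmicroscopy.org"


def mapr_url(annotation_type, value, base=IDR_BASE):
    """Build an IDR mapr browse link, following the pattern of the example URLs.

    annotation_type: e.g. "gene" or "compound" (the two types shown in the text).
    value: the search term, URL-encoded as the 'value' query parameter.
    """
    return f"{base}/mapr/{annotation_type}/?{urlencode({'value': value})}"


# Reproduce the two example queries, then generate links for a list of genes
print(mapr_url("gene", "PTEN"))
print(mapr_url("compound", "Paclitaxel"))
for gene in ["PTEN", "TP53"]:
    print(mapr_url("gene", gene))
```

This only constructs browse links; programmatic retrieval and combination of results, as described for the VAE, would go through IDR’s own APIs rather than this helper.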

One such direction for future analysis involves the application of deep learning tools to biological and biomedical imaging. Using these tools to automatically assign an image to a specific class based on phenotype, content or other image characteristics is a major opportunity for future use of IDR, and IDR’s comprehensively annotated datasets can serve as training data for many different applications. To catalyse this type of use, we will begin identifying and recording the location of individual cells in the IDR datasets in 2018. All identified cells will be available through the IDR API and queryable based on reporters, experimental parameters and calculated features.

Building an image data ecosystem

IDR is in production but is still an early step in the construction of a mature image data publishing research infrastructure for the bioscience community. IDR integrates and connects independent datasets and is therefore classed as an added value database or a knowledgebase, according to established definitions of public data resources.

The IDR project is working with Euro-BioImaging (http://www.eurobioimaging.eu/), the European Research Infrastructure for Biological and Biomedical Imaging, to build and deliver robust, sustainable services for imaging data. In the long-term this will mean the establishment of central archives for imaging data that hold imaging datasets related to all published bioimaging studies and provide scalable, streamlined routes for data submission.

A white paper outlining the vision for a public image data ecosystem is available (https://arxiv.org/abs/1801.10189). In the long term, institutions that provide centralised data archiving and processing, database and tool developers, computational scientists and experts in imaging applications will need to contribute technology, know-how and domain knowledge to build the complete ecosystem.

Outlook and opportunities

The construction and use of bioimaging data resources is just beginning and will take 5-10 years to fully mature. Our work on IDR has demonstrated that TB-scale datasets can be routinely submitted, annotated and published, and that public tools for data analysis and querying can be deployed and used at scale.

Even at this early stage, it is clear that these resources should be conceived, funded and run in connection with national or trans-national infrastructures that have strong links to the data submission and user communities.

This is the best way to ensure the establishment of truly useful and usable resources while also providing national and trans-national routes for sustainable funding.

Jason R Swedlow and the OME Consortium

Open Microscopy Environment

University of Dundee

Dundee, UK

+44 (0) 1382 385819

j.swedlow@dundee.ac.uk